A presentation based on the concepts and ideas from this article was delivered to the San Diego Kotlin User Group in March 2021. Video of the presentation can be viewed on YouTube.

In Part 1, we attempted to evaluate whether existing NFL Win Probability models are useful and/or accurate. Proponents of the models, including their builders, claim that while not perfect, in most scenarios the margin of error is small, and much of their value comes from monitoring changes in WP, allowing observers to determine the degree to which a specific play, or series, increased or decreased a team's WP. I agree these are legitimate uses for general managers, coaches and play-callers. But the vast majority of the public only hears anything about WP in the context of a blown lead ("Atlanta had a 99.8% chance to win the Super Bowl!"), and the models are quite inaccurate at the extremes, often under-estimating the likelihood of a comeback by a factor of 2, 5, even 10 or more. Since WP models simply don't work at the only times the general public cares about them, I conclude that yes, the models are broken.

We can study the non-proprietary Win Probability models and start to identify reasons why the models feel big comebacks are so unlikely. The models all use historical NFL data, and from that, use a variety of techniques and formulas to forecast a WP for all in-game scenarios. This immediately leads to a clear problem: the NFL only plays 256 games each season (compared to 1230 in the NBA and 2430 in MLB), and in total, about 40,000 plays are run per season (vs about 185,000 plate appearances in baseball, or 220,000 field goal attempts in the NBA). When you multiply out all the combinations of field position, down-and-distance, time remaining, and point differential, you'll realize there aren't enough plays to cover all of the scenarios that might arise! Even after compiling stats from multiple seasons, and binning (e.g. 20 bins of 5-yard segments instead of 100 distinct yard lines), lots of scenarios have very sparse historical data from which to learn. So any model builder starts out at a disadvantage when trying to predict NFL outcomes, compared with other sports.
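To get a feel for how thin the data gets, here is a rough Python sketch that multiplies out one possible binning scheme. Only the 20 field-position bins and the roughly 40,000 plays per season come from the text above; the other bin counts (`distance`, `time_remaining`, `point_differential`) are assumptions picked purely for illustration, and real models bin (or smooth) differently.

```python
from math import prod

# Hypothetical binning scheme. Only "field_position" (20 bins) and the
# ~40,000 plays per season are taken from the article; every other
# number here is an assumption made for illustration.
ASSUMED_BINS = {
    "field_position": 20,      # 5-yard segments, as in the article
    "down": 4,                 # 1st through 4th down
    "distance": 5,             # assumed: five yards-to-go buckets
    "time_remaining": 60,      # assumed: one bin per minute of regulation
    "point_differential": 15,  # assumed: coarse buckets of the score margin
}
PLAYS_PER_SEASON = 40_000


def plays_per_scenario(bins, plays_per_season, seasons):
    """Average number of historical plays available per scenario bin."""
    scenarios = prod(bins.values())
    return plays_per_season * seasons / scenarios


scenario_count = prod(ASSUMED_BINS.values())
print(f"distinct scenarios: {scenario_count:,}")
for seasons in (1, 5, 10):
    avg = plays_per_scenario(ASSUMED_BINS, PLAYS_PER_SEASON, seasons)
    print(f"{seasons:>2} season(s): {avg:.2f} plays per scenario on average")
```

Under these assumed bins, even several seasons of data average out to roughly one play per scenario or less, and the average hides the fact that blowout scenarios get far fewer plays than close-game scenarios.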

Focusing specifically on scenarios where WP exceeds 90%, or 95%, amplifies the sparseness issue. Far more plays are run when a game is still competitive, than during a blowout. So there are far fewer data points available to train a model. And the data that does exist tends to suffer from a few issues:

- Historical data where a team's WP is very low (under 5%) is heavily skewed by bad teams. This isn't a surprise. What kind of teams tend to get on the wrong side of blowouts? Terrible teams. The following chart shows, from 2015-2019, the probability of trailing by 21+ points in the first half, by the total number of season wins. Therefore, when we get to the playoffs, or the Super Bowl, and one team is way behind, the WP model is basing its forecast mostly on what very bad teams would do. In other words, when Patrick Mahomes and the Chiefs were down 10 points, facing 3rd-and-15 at their own 35 with just 7 minutes remaining in Super Bowl LIV, WP models had to base their predictions (about 4% WP) on data from guys like Sam Darnold or Mitch Trubisky or Jameis Winston, because those guys are often losing late, while QBs like Mahomes aren't. The fact that the Jets or Bears are unlikely to pull out a win shouldn't apply to the Chiefs, who clearly have superior talent.

- Models don't account for how a team found itself trailing. In high-profile and playoff games, deficits tend to occur when highly unusual things happen, which aren't likely to continue throughout the game. But in the historical data, big deficits tend to occur because one team is simply better and is able to neutralize the other. When unusually negative things caused the deficit, we should expect that team to perform better going forward because those rare, negative things probably won't recur. On the other hand, when the deficit is caused by normal play, or when negative plays aren't unusual, then we shouldn't expect a team to improve and somehow overcome the hole it's in. A few examples:

- Super Bowl LIV was tied 10-10 at halftime. San Francisco then built a 10-point lead in the 3rd quarter as both Chiefs drives in that quarter ended in Mahomes throwing an interception. Mahomes threw just 5 picks all season, and never twice in a single game. While winning the game wasn't certain, observers could be fairly confident that Mahomes's 4th quarter would be much better than his 3rd.

- Houston built its 24-0 lead over Kansas City in the Divisional Round with help from a blocked punt returned for a TD. The Texans held Mahomes to just 1 first down in his first 3 drives, while scoring 2 TDs and an FG on their first 4 drives, in addition to the blocked punt. Much of this was highly unusual -- a special teams TD, Mahomes's ineffectiveness, and also the speed of the early scoring. Houston went ahead 24-0 in the 21st minute. Of the 3 other games played that same weekend, the 24th point (both teams combined) was scored in the 29th, 30th, and 39th minute. Again, a repeat of a blocked punt wasn't likely, nor was Mahomes's failure to generate first downs. There was no guarantee the Chiefs could overcome 24-0, but statistically, the last 3 quarters were virtually guaranteed to be much better than the first.

- In Super Bowl LI (NE-ATL), 2 of New England's first 5 drives ended in turnovers (the other 3 were punts). LeGarrette Blount fumbled at the Atlanta 29. Blount had only fumbled twice all season on 299 carries, both back in September. Similarly, Tom Brady had only thrown 4 INTs all season (including the earlier playoff games), none of which were pick-6'd, but Robert Alford of the Falcons returned his interception 82 yards for a score, putting Atlanta ahead 21-0. The Patriots needed a lot of things to happen to win the game, but fortunately for them, they were extremely unlikely to commit another 2 turnovers in the 2nd half.

- Contrast these situations with the five 16+ point blowouts in Week 3 of the 2020 season. The losing teams were Jacksonville, Las Vegas, Denver, the Jets and the Giants -- a combined 3-12. None of their opponents needed a special teams TD or a pick-6 to build a big lead. Sure, there were plenty of turnovers, but that wasn't unusual for these guys. It was Sam Darnold's 7th multi-INT game in 30 career starts. In Denver's case, they were down to their 3rd-string QB. Jeff Driskel and Brett Rypien's inability to overcome a 23-3 deficit is now part of the historical data that will one day be used to calculate Win Probability for guys like Mahomes or Lamar Jackson or Russell Wilson.

- Of course, simply not repeating unusual mistakes isn't enough to ensure a victory. San Francisco reached Super Bowl LIV by crushing Green Bay, leading 27-0 at halftime. Aaron Rodgers fumbled a snap and threw an INT in the first half, and Green Bay only crossed the 50-yard-line once. ESPN calculated SF's WP at 99.6%. Rodgers was much more recognizable in the second half, leading 3 straight touchdown drives of 75, 75 and 92 yards. But the 49ers added points as well, and the Packers never got closer than 14 points.

- Most models consider only point differential, not each team's score. Real quick: who do you think is more likely to make a comeback, a team losing at halftime 21-0 or 35-14? Both are losing by the same 21 points. But in the first scenario, where one team has failed to generate any points, we would expect that team to struggle more in the second half than a team that has already scored 14. Additionally, a low-scoring game naturally has fewer scoring opportunities, so the likelihood that the trailing team can generate enough points to overcome the deficit is lower. In a very simple model where we take the football skill out of the equation and replace it with coin flips, a team down 3 scores (i.e. 21-0) with 3 scores remaining in the game needs all 3 scores to go its way, which would result in a tie. The probability of winning equals the chance of getting 3 of 3 coin flips to land Heads, 12.5%, times a 50% chance of winning the tiebreaker (i.e. overtime), or 6.25% overall. Now consider a game where a team trails 5 scores to 2 (i.e. 35-14) with 7 more scores remaining. There are 128 combinations of 7 coin flips. Getting 6 or 7 Heads will win the game outright, and 5 Heads will tie. P(6 or 7) = 6.25% and P(5) = 16.4%. In total, 6.25 + (16.4 / 2) = 14.5% chance of winning, 2.3 times more likely than when you need 3 of 3 in order to tie. Obviously real football games are far more complex, but this quick demo shows why simply using point differential, and not the scores of both teams, is not sufficient.

- Not all models include timeouts. A team's timeout inventory is essential to understanding its ability to mount a late comeback. This is obvious to any casual viewer, as the ability to prevent an opponent from simply running out the clock potentially gives a team an extra offensive possession. On rare occasions it can even gain two, given the 2-minute warning and some clock mis-management by the opponent. Timeouts also increase the likelihood of success of a final offensive drive, as the quarterback is not limited to sideline throws, the running game is viable, and the offense can simply run more plays if it is able to stop the clock.

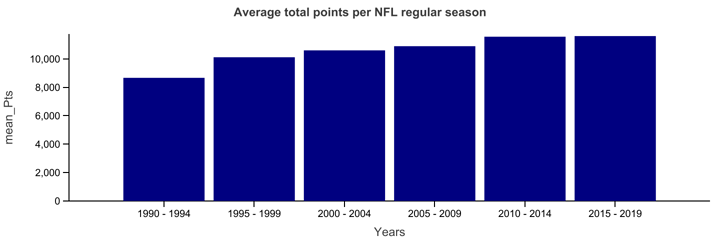

- Models may use historical data that's too old. Points and touchdowns have been on an upward trend for the past 30 years, at least. The plots below, based on data gathered at pro-football-reference.com, show both points and receiving touchdowns steadily marching upwards (we focus on Receiving TDs because of the emphasis on passing when a team is behind). Binning the years into groups of 5 removes anomalies, clarifying the upwards trend. Because NFL data is sparse, as discussed earlier, it may be tempting to use 10 or 20 years of data in order to solidify the data set. But as the binned graphs show, looking backwards more than just a few years is likely to include data that doesn't reflect the current state of QB talent, offensive aggressiveness, and the ability to quickly pick up large chunks of yards when needed. This is even more noticeable when we repeat the analysis, focusing purely on passing efficiency statistics -- adjusted yards per attempt and passer rating.

![Average receiving TDs, binned into 5-year groups](/static/avg_rectd_binned-8b2a29a81901f6ecaaf717227706b28c.png)

Link to Jupyter Notebook used to create similar graphs of QB Rating and Avg Yds/Attempt.

- Finally, this is only an observation, but from watching a lot of football for many years, it just seems that more strange things happen as the spotlight gets bigger, especially in Super Bowls. There's a combination of the ultra-high pressure of win-or-go-home in every playoff game, players with a no-holds-barred attitude, and coaches who empty their bag of trick plays. I'm not sure Peyton Manning ever had the first snap go over his head for a safety, except in the Super Bowl. Sean Payton probably never called a surprise onside kick, except in the Super Bowl. Although Mike Ditka loved to find creative ways to use William Perry on offense, only in the Super Bowl did Perry attempt a pass (he was sacked). Thurman Thomas never lost his helmet, except at a Super Bowl. Both of New England's Super Bowl losses to the Giants were the Patriots' lowest-scoring games of their respective seasons. I admit to some cherry-picking here -- anything that happens in a Super Bowl is obviously more memorable than something unusual in a random Week 6 game. But just as some players succumb to the increased pressure, I think some coaches make more sub-optimal decisions in Super Bowls, which both causes unusually large deficits and then prevents a team from protecting its lead. In other words, all other things being equal, the probability of a blown lead in a Super Bowl is naturally going to be larger than the probability of blowing the same lead in a regular-season game.
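Going back to the coin-flip comparison of 21-0 and 35-14 in the point-differential item above, the arithmetic can be reproduced with a few lines of Python. This is a minimal sketch of that toy model only: every remaining score is a fair coin flip, all scores are worth the same, and a tie is a 50/50 overtime. The function name `coin_flip_win_probability` is made up for this example.

```python
from math import comb


def coin_flip_win_probability(deficit_scores, remaining_scores):
    """Win chance for the trailing team in the toy coin-flip model.

    deficit_scores: how many scores the team currently trails by.
    remaining_scores: how many more scores will occur in the game.
    Each remaining score goes to either team with probability 1/2,
    and a tie after the last score is treated as a 50/50 overtime.
    """
    outcomes = 2 ** remaining_scores
    win = 0.0
    for heads in range(remaining_scores + 1):
        tails = remaining_scores - heads
        # Final margin, in scores, from the trailing team's point of view.
        margin = heads - tails - deficit_scores
        probability = comb(remaining_scores, heads) / outcomes
        if margin > 0:
            win += probability      # outright win
        elif margin == 0:
            win += probability / 2  # tie, then a coin-flip overtime
    return win


low_scoring = coin_flip_win_probability(3, 3)   # trailing 21-0, 3 scores left
high_scoring = coin_flip_win_probability(3, 7)  # trailing 35-14, 7 scores left
print(f"21-0:  {low_scoring:.2%}")
print(f"35-14: {high_scoring:.2%}")
print(f"ratio: {high_scoring / low_scoring:.1f}x")
```

The same function can be called with other deficits and remaining-score counts to see how quickly the toy model's comeback chance changes as the game gets higher-scoring.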

While taking a look at the non-secret WP models, it does appear that some of these issues are starting to be addressed. The nflfastR model is the newest model I'm aware of, and it's open-source. It is an evolution of an earlier open-source model, nflscrapR. (Both are written in the R language, which is the reason for the 'R' flair at the end of the names). nflfastR is much newer than other models -- any that evaluated the New England / Atlanta Super Bowl are at least 4 years old. Brian Burke, now at ESPN, first published WP charts about a decade ago. Meanwhile nflfastR was first released publicly in April 2020, so we would expect that it attempts to improve upon inaccuracies in earlier models. From a post describing the composition of the nflfastR model, we can see it does include timeouts. The authors have also built an Expected Points model which includes home/away, which distinguishes historical data by "era", and even includes dome/outdoors. Hopefully these factors will soon make their way into the WP model as well.

Probably the biggest change modelers can make, especially when they are evaluating elite teams, is to recognize that historical data based upon weaker teams simply doesn't apply. Such data is more likely to introduce inaccuracy, rather than reduce it. We demonstrated above that the vast majority of historical data regarding the probability of large comebacks is based on weak teams' inability to do so. But elite teams, especially elite offenses, can. As an example, we can look at Brian Burke's tweet after the NE/ATL Super Bowl:

Since '01, teams down between 26 and 23 points with 6-9 min left in the 3Q were 0-190, now 1-191 (about .5% win rate).

-- Brian Burke (@bburkeESPN), February 6, 2017

This tweet demonstrates the difficulty of estimating a probability for something that had never occurred before. Whether past teams were 0-190, 0-19, or 0-1900, the historical data's win rate is zero. However, it's a mistake to assume that something cannot happen just because it has not yet happened. Statisticians would likely approximate the true probability using the "rule of three", arriving at 95% confidence that P(NE comeback) < 3/190, or roughly 1.6%. But if you throw out all the games where the trailing team was a sub-10-win team, you're left with only about 15% of the games, so the proper formula is closer to 3/28, which says our confidence window should extend from 0 to about 11%. Even some of that 15% can be filtered out -- games with a significant injury, Week 17 games where starters are resting, games where the trailing team did not possess the ball until well after that 3-minute window had elapsed -- and each time we do, it reduces the denominator in the "rule of three" formula, which further widens the confidence window for the true probability. We still don't have a definitive number, but the model is no longer restricted to assuming a value that must be under about 1.6%, and is more likely to converge on a value in the range of 4 to 7% (see footnote 1).
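The "rule of three" arithmetic above is easy to check. The sketch below compares the 3/n shortcut with the exact 95% upper bound it approximates, which comes from solving (1 - p)^n = 0.05 for p. The sample sizes 190 and 28 are the ones used in the paragraph above; the function names are made up for this example, and this is a simple illustration rather than how any published WP model is calibrated.

```python
def rule_of_three_upper_bound(n_games):
    """Approximate 95% upper bound on p after 0 successes in n_games."""
    return 3.0 / n_games


def exact_upper_bound(n_games, confidence=0.95):
    """Exact upper bound: the p for which 0 successes in n_games
    would occur with probability (1 - confidence)."""
    return 1.0 - (1.0 - confidence) ** (1.0 / n_games)


samples = [
    ("all comparable games", 190),
    ("trailing team had 10+ wins (about 15%)", 28),
]
for label, n in samples:
    approx = rule_of_three_upper_bound(n)
    exact = exact_upper_bound(n)
    print(f"{label}: n={n}, rule of three {approx:.1%}, exact {exact:.1%}")
```

The shortcut and the exact bound stay close to each other at these sample sizes, and both widen quickly as games are filtered out of the denominator.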

Given the improvements we are able to see in the development of the nflfastR model, we're optimistic that its authors will re-calibrate it to increase accuracy at the extremes and reduce the current over-confidence. Most likely, world-class mathematicians will use much more sophisticated methods than the simple "rule-of-three" and other approximations. Unfortunately, however, this takes time, and ESPN and the NFL have a much larger audience than a small team of academics. Although the television network and the league will also probably refine their model, I have a feeling they will continue to mis-inform the public for a few more years.

Footnotes

1. I did not repeat Burke's study of games between 2001 and 2016, but I did look at all games from 2015-2019. 78 games fit Burke's parameters: a 23 to 26-point lead with 9 to 6 minutes left in the 3rd quarter. Except for the Patriots in the Super Bowl, none of the other 77 teams successfully came back to win. However, instead of assuming 1-77, rejecting all the games where the trailing team was not a 10+ win team left us with a 1-10 record (as described in the first issue listed above, weak teams are far more likely to fall behind than strong teams). A few of those games were affected by injury or resting players, leaving only 9 comparable games, or 12% of the original sample. Following this pattern is how we assume that instead of 190 comparable games, only about 15% or fewer of those 190 are actually a fair comparison. Jupyter notebook here.