No, you do not need expensive campaign management software. SQL can handle your entire campaign.

1. Query a data warehouse, not the source Casino Management System. The ability to access and query the source CMS directly is terrific when you’re building real-time reports like dashboards, heat maps, or player tracking. But segmenting players relies on historical data, and having a well-structured data warehouse enables your team to pre-process data, write custom views, define custom calculations, and speed up queries by defining indexes and relationships in your tables.

2. If you are running any long-term tests, assign all players to a test group before performing any segmenting. Check that the test groups are equal (or appropriately proportional) in size, worth, and demographics. Assignments must be persisted in the database to assure every player will remain in the same group over the complete length of the test.

3. All offer information is stored in the database. All elements of all your offers, like dollar amounts, start/end dates, variable text copy, etc. should be INSERTed into tables prior to running a segmenting queries. Use Excel to build custom spreadsheets for use among your team (for example, to prepare sets of offer dates) which ultimately generate INSERT statements that you can easily copy and paste into your SQL IDE. The goal is to have the entirety of all the offers in the database, to be collected and assigned via SQL, eliminating the need for time-consuming, error-prone, post-processing in Excel or some other tool.

4. Enable flagging specific individuals for an override or customization. Your marketing executives or hosts may have made arrangements with specific players for any number of reasons, so that the offer those players receive is different than whatever is generated by your calculations. Making manual adjustments to output files is likely to be a major source of errors and a huge time drain. Of course, if the custom arrangements are completely outside the parameters of the offer, this may not be possible. But if it is something simple like promising a consistent $100 free slot play award every period, then it is easy to set the database up to always offer this player $100 even if the calculated offer is for a different amount.

5. Use predictable, meaningful offer codes that are constructed, rather than assigned. With a bit of creativity, you can pack a lot of information into even relatively short codes. For example, a single digit can identify 3 concurrent A/B tests.

Use single characters to represent worth segments, frequency/recency segments, geographic segments, and offer dates and sequence. In many cases, 6 or 7 characters is sufficient to completely describe the segment. But if your CMS is simply too unaccommodating and there is no way to consolidate the codes, you should still construct meaningful offer codes and map them to a series of CMS-friendly codes in another database table. This way, you still have meaningful codes to work with in every part of your internal analysis and process, and when you are forced to work with the CMS codes, you can quickly translate them into the meaningful ones.

6. Create segments even for players who don’t qualify for the offer. These segments won’t receive an offer, but you will be able to easily identify players who missed out. Later on, when you are reviewing player histories, you or your analysts can verify that they were evaluated, and know the reason(s) they didn’t qualify for that specific offer. Not enough visits? Daily value too low? The alternative is not to assign any segment to non-qualifiers, which will make any post-analysis that includes these players (for example, comparing players who received your lowest offer to those with no offer) much more difficult.

7. Don’t export your data mid-process to Excel to utilize its functions. Stopping your data flow mid-process because you need to utilize an Excel function slows you down and introduces all kinds of possible errors. SQL alone is very capable of handling date math, text manipulation, performing VLOOKUP-type categorization (both exact and inexact matches), determining min/max or first/last, computing standard deviations or covariances, etc. If you really need complex functionality, most RDBMS engines allow for custom functions written in a full programming language (i.e. C# for SQL Server, Java for Oracle, Python or others on Postgres), which is preferable to pushing data to Excel and back.

8. Break up long, complicated SQL statements by using Common Table Expressions (CTEs). CTE’s provide sanity to long, unwieldy queries. Instead of the inside-out logic flow of multiple subqueries, CTE’s allow for an orderly, top-down approach (except in Teradata, which implemented them backwards) that makes refactoring and debugging much easier. And since CTE’s can be referenced multiple times, by subsequent CTE’s or in your final all-encompassing query, they should speed up overall execution time.

9. Don’t make manual changes to your final, tested SQL statements. If you need to change a hard-coded date in the query each time it runs, replace it with a query parameter. This is especially true if the date (or text, or other number) needs to be set multiple times within a query. Utilizing a parameter ensures every instance of that value is identical. One of the easiest errors to make is to change a value in one part of a query and forget to change it somewhere else, or mistype it. Parameters eliminate this risk. The implementation is different across databases, as some databases (SQL Server is the easiest) allow for scalar variables to be DECLAREd inline while other databases don’t. Regardless, most SQL IDE’s have a mini-language for inline parameters and will prompt the user for values upon query execution.

10. Save all segment assignments, and the stats you calculated, in the database. Your analysts are going to need to access “before” stats in order to evaluate the impact of the promotion. So if you’ve already calculated ADW or counted visits, save those in the database along with the segment assignments. Over time, these stats combine to form a valuable historical record of players’ trends. These numbers come in handy for many reasons, including experimenting with adjustments to your segment boundaries or minimum/maximum qualifications.

11. After assigning players to segments, continue to use the database to connect each player with their offers. Earlier I stated that you should store all offer data in the database, this is the natural outcome of doing so. It may be tempting to use Excel to match up players and their segments with offers, or to utilize expensive, proprietary campaign manager software, but the fastest and most accurate method is to integrate the entire process together inside your database.

12. Test the feasibility of your output, checking for errors. Once players have been matched up with segments and with their offers, it is simple to calculate counts and sums to verify the data you just ran is in line with expected values. For example, comparing the number of players in each segment to the same segments in the last period. If your offers are dollar-denominated (free slot play, food comps), it’s easy to calculate the total dollars being offered, the largest and smallest individual offer, and aggregate offer (i.e. $100 food credit x 4 per month = $400 aggregate). These values should all be eyeballed to ensure they are in line with results of prior database runs.

(Bonus) 13. Use the database to generate all final output files. You likely have multiple endpoints (CMS, print shop, email template, web site), all of which expect data in different formats, with different fields, in different orders. It may be tempting to hand over a generic data dump and let someone else prepare the specific output files, but that leaves the process too vulnerable to errors that you’ll never see and won’t be able to prevent. SQL can almost certainly manipulate date formats and concatenate text strings required for your final output, and a good IDE will make it easy to run a prepared query per endpoint and export the results into whatever format you need, whether that is CSV, CSV with semicolons, XLSX, XML, JSON, or something else.

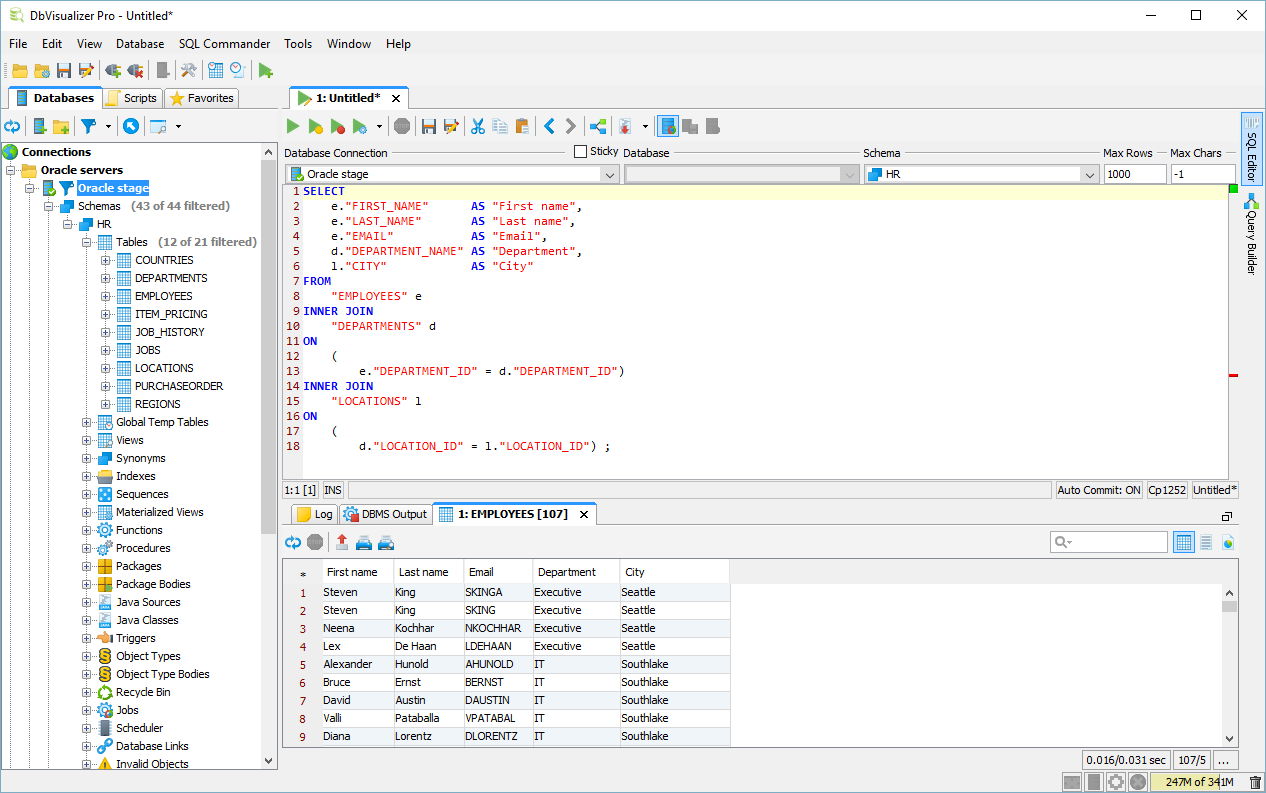

Plug: Multiple times I have mentioned features found in a “good SQL IDE.” While the default interface supplied by database vendors range from usable (Teradata SQL Assistant, pgAdmin) to pretty good (SSMS), none are as flexible or capable as a dedicated IDE. While there are a number of good choices (many of which I would like to try, but haven’t), the best tool I have found so far, overall, is **DBVisualizer**. It can connect to just about every RDBMS out there (including Teradata, although it isn’t listed), color-codes your queries, supports auto-completion and graphical query building (like Access), and enables inline data editing.

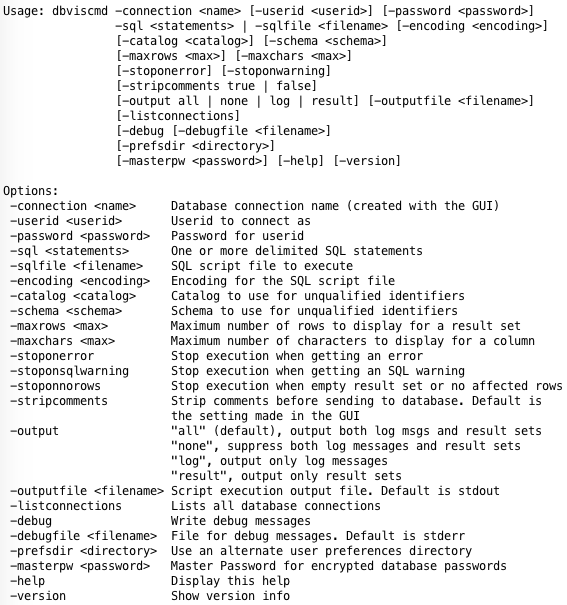

It can create display result data as a graph, which is great for data exploration. But among the coolest features are the ability to run the program from the command line, its support of its own parameter syntax as well as DBMS native syntax, and a mini-language specifying details of output formats. These last 3 points allow you to run a set of parameterized queries from a command-line script, totally automated, without a UI, saving the results in a specific output format. Very cool and very powerful, as it allows you to write a batch file or even better, use an ETL tool to automate multiple sequential queries. In other words, run nearly the entire process unattended. The DBViz support team also happens to be extremely responsive and constantly add features in response to user requests. For about $200, it’s no-brainer, given the huge productivity boost you’ll immediately experience.